Zeeschuimer (“sea foamer”) is a web browser extension from the Digital Methods Initiative in Amsterdam that enables you to collect data while you are browsing social media sites for research and analysis.

It currently works for platforms such as TikTok, Instagram, Twitter and LinkedIn, and exports the collected data as an ndjson (newline-delimited JSON) file, which can be imported into the open source 4CAT: Capture and Analysis Toolkit for analysis.
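
In ndjson, each line of the file is a complete JSON object, so a file can be read one record at a time. The minimal Python sketch below only illustrates the format. The helper name `read_ndjson` and the two sample records are hypothetical; real Zeeschuimer exports contain many more fields per item.

```python
import json

def read_ndjson(lines):
    """Parse newline-delimited JSON: one JSON object per non-empty line."""
    records = []
    for line in lines:
        line = line.strip()
        if line:  # skip blank lines, such as a trailing newline at end of file
            records.append(json.loads(line))
    return records

# Hypothetical two-record sample, not real Zeeschuimer output.
sample = '{"id": "1", "data": {"author": "a"}}\n{"id": "2", "data": {"author": "b"}}\n'
records = read_ndjson(sample.splitlines())
print(len(records), records[1]["data"]["author"])
```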

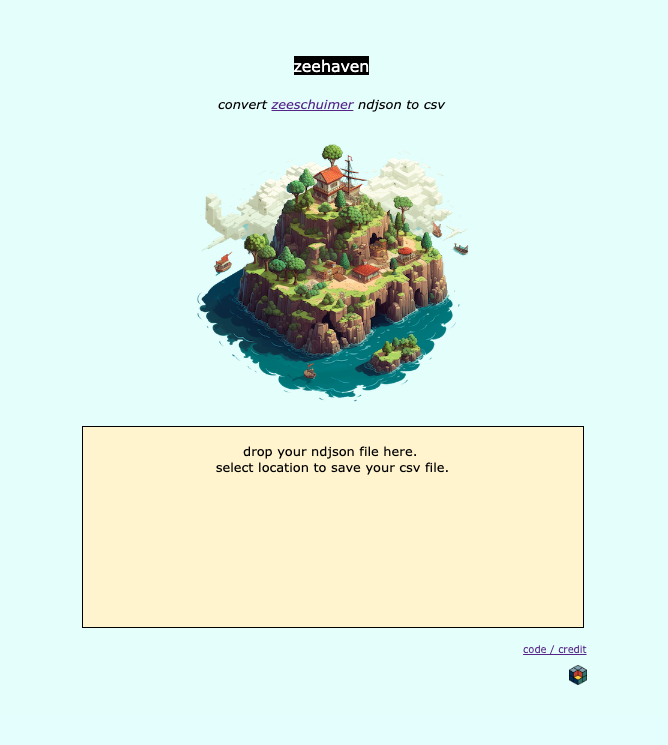

To make data gathered with Zeeschuimer more accessible to researchers, reporters, students and others, we’ve created zeehaven (“sea port”), a tiny web-based tool that converts ndjson into csv format, which is easier to explore with spreadsheets as well as common data analysis and visualisation software.

Drag and drop an ndjson file into the “sea port” and the tool will prompt you to save a csv file. ✨📦✨
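
The main step in such a conversion is turning nested JSON records into flat rows with a shared set of columns. The Python sketch below shows one way to do this. It is not zeehaven's code, and it assumes that nested objects become dotted column names (e.g. `data.author`), that lists are stored as JSON strings, and that fields missing from a record are left as empty cells. The helper names `flatten` and `ndjson_to_csv` and the sample data are hypothetical.

```python
import csv
import io
import json

def flatten(obj, prefix=""):
    """Flatten nested dicts into one dict with dotted keys.

    Assumption for this sketch: lists are kept as JSON strings
    rather than expanded into separate columns.
    """
    flat = {}
    for key, value in obj.items():
        name = f"{prefix}.{key}" if prefix else str(key)
        if isinstance(value, dict):
            flat.update(flatten(value, name))
        elif isinstance(value, list):
            flat[name] = json.dumps(value)
        else:
            flat[name] = value
    return flat

def ndjson_to_csv(ndjson_text):
    """Convert ndjson text into csv text with one row per record."""
    rows = [flatten(json.loads(line)) for line in ndjson_text.splitlines() if line.strip()]
    # Records can have different fields, so collect the union of columns
    # in the order they are first seen.
    columns = []
    for row in rows:
        for col in row:
            if col not in columns:
                columns.append(col)
    out = io.StringIO()
    writer = csv.DictWriter(out, fieldnames=columns)  # missing keys become empty cells
    writer.writeheader()
    writer.writerows(rows)
    return out.getvalue()

# Hypothetical sample: the second record has no "tags" field.
sample = '{"id": 1, "data": {"author": "a", "tags": ["x"]}}\n{"id": 2, "data": {"author": "b"}}\n'
csv_text = ndjson_to_csv(sample)
print(csv_text)
```

Keeping lists as JSON strings avoids an unbounded number of columns when, for example, posts have varying numbers of hashtags, at the cost of needing a second parsing step to analyse those values.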

zeehaven was created as a collaboration between the Centre for Interdisciplinary Methodologies, University of Warwick, and the Department of Digital Humanities, King’s College London. It grew out of a series of Public Data Lab workshops held earlier this year to exchange digital methods teaching resources.

You can find the tool here and the code here. All data is converted locally.