There will be a launch event for the Convergence special issue on critical technical practice(s) in digital research on Wednesday 10th July, 2-4pm (CEST). The event will include an introduction to the special issue and brief presentations from several special issue contributors, followed by a discussion of possibilities and next steps. You can register here.

Author: Public Data Lab

Critical Technical Practice(s) in Digital Research – special issue in Convergence

A special issue on “Critical Technical Practice(s) in Digital Research” co-edited by Public Data Lab members Daniela van Geenen, Karin van Es and Jonathan W. Y. Gray has been published in Convergence: https://journals.sagepub.com/toc/cona/30/1.

The special issue explores the pluralisation of “critical technical practice”, starting from its early formulations by Philip Agre in the context of AI research and development and moving to the many ways in which it has resonated with, and been taken up by, different publications, projects, groups and communities of practice, and what it has come to mean. The special issue serves as an invitation to reconsider what it means to use this notion, drawing on a wider body of work that includes work beyond Agre.

The introduction to the special issue explores critical technical practices according to (1) Agre, (2) indexed research and (3) contributors to the special issue, before concluding with questions and considerations for those interested in working with this notion.

The issue features contributions on machine learning, digital methods, art-based interventions, one-click network trouble, web page snapshotting, social media tool-making, sensory media, supercuts, climate futures and more. Contributors include Tatjana Seitz & Sam Hind; Michael Dieter; Jean-Marie John-Mathews, Robin De Mourat, Donato Ricci & Maxime Crépel; Anders Koed Madsen; Winnie Soon & Pablo Velasco; Mathieu Jacomy & Anders Munk; Jessica Ogden, Edward Summers & Shawn Walker; Urszula Pawlicka-Deger; Simon Hirsbrunner, Michael Tebbe & Claudia Müller-Birn; Bernhard Rieder, Erik Borra & Stijn Peters; Carolin Gerlitz, Fernando van der Vlist & Jason Chao; Daniel Chavez Heras; and Sabine Niederer & Natalia Sánchez Querubín.

There will be a hybrid event to launch the special issue on 10 July, 2-4 pm CEST.

Links to the articles and our evolving library can be found here:

https://publicdatalab.org/projects/pluralising-critical-technical-practices/.

If you’re interested in critical technical practices and you’d like to follow work in this area, we’ve set up a new mailing list here for sharing projects, publications, events and other activities: https://jiscmail.ac.uk/CRITICAL-TECHNICAL-PRACTICES

Image credit: “All Gone Tarot Deck”, co-created by Carlo De Gaetano, Natalia Sánchez Querubín, Sabine Niederer and the Visual Methodologies Collective, from “Climate futures: Machine learning from cli-fi”, one of the special issue articles.

Public Data Lab talk at University of Bucharest

Public Data Lab co-founders Liliana Bounegru and Jonathan Gray will be giving a talk at the Center of Excellence in Image Studies (CESI), University of Bucharest on 12th April 2024. Further details are in the poster below.

New article on cross-platform bot studies published in special issue about visual methods

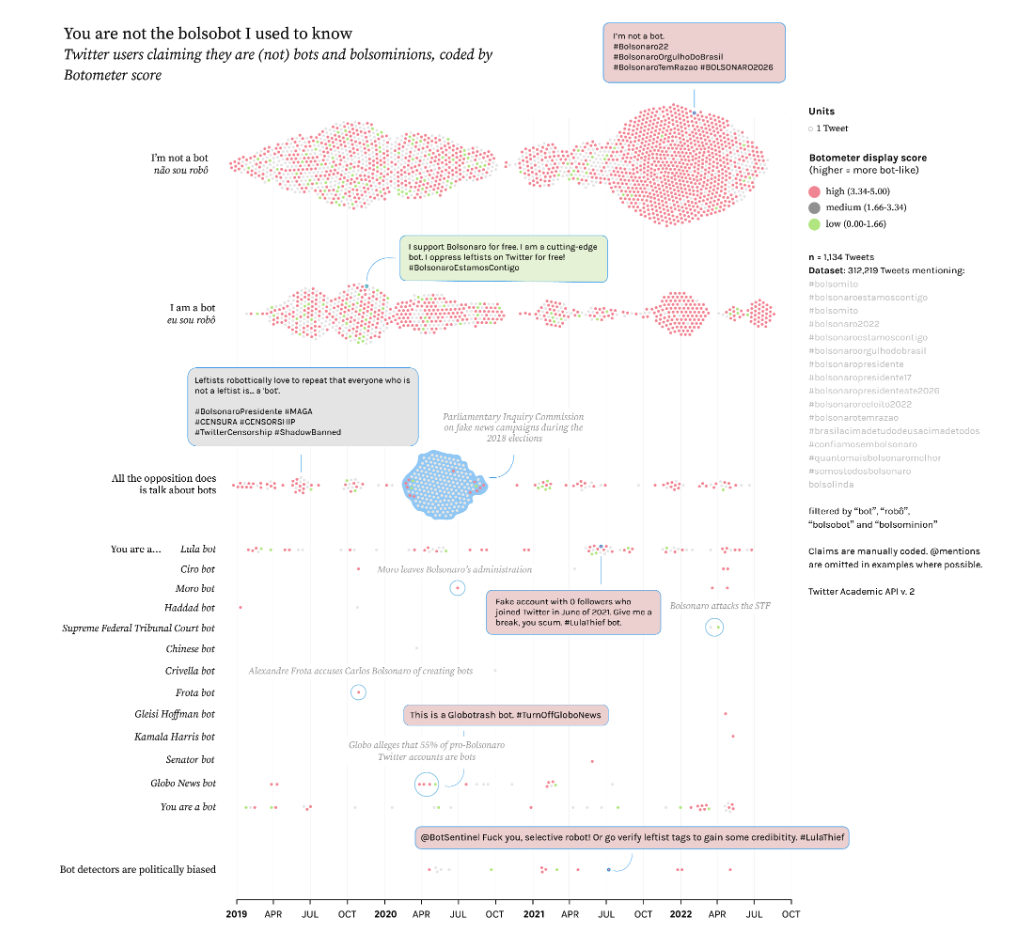

An article on “Quali-quanti visual methods and political bots: A cross-platform study of pro- & anti- bolsobots” has just been published in the special issue “Methods in Visual Politics and Protest” of the Journal of Digital Social Research, co-authored by Public Data Lab associates Janna Joceli Omena, Thais Lobo, Giulia Tucci, Elias Bitencourt, Emillie de Keulenaar, Francisco W. Kerche, Jason Chao, Marius Liedtke, Mengying Li, Maria Luiza Paschoal, and Ilya Lavrov.

The article provides methodological contributions for interpreting bot-associated image collections and textual content across Instagram, TikTok and Twitter/X, building on a series of data sprints conducted as part of the Public Data Lab “Profiling Bolsobot Networks” project.

The full text is available open access here. Further details and links can be found at the project page. Below is the abstract:

Computational social science research on automated social media accounts, colloquially dubbed “bots”, has tended to rely on binary verification methods to detect bot operations on social media. Typically focused on textual data from Twitter (now rebranded as “X”), these methods are prone to finding false positives and failing to understand the subtler ways in which bots operate over time and in particular contexts. This research paper brings methodological contributions to such studies, focusing on what it calls “bolsobots” in Brazilian social media. Named after former Brazilian President Jair Bolsonaro, the bolsobots refer to the extensive and skilful usage of partial or fully automated accounts by marketing teams, hackers, activists or campaign supporters. These accounts leverage organic online political culture to sway public opinion for or against policies, opposition figures, or Bolsonaro himself. Drawing on empirical case studies, this paper implements quali-quanti visual methods to operationalise specific techniques for interpreting bot-associated image collections and textual content across Instagram, TikTok and Twitter/X. To unveil the modus operandi of bolsobots, we map the networks of users they follow (“following networks”), explore the visual-textual content they post, and observe the strategies they deploy to adapt to platform content moderation. Such analyses tackle methodological challenges inherent in bot studies by employing three key strategies: 1) designing context-sensitive queries and curating datasets with platforms’ interfaces and search engines to mitigate the limitations of bot scoring detectors, 2) engaging qualitatively with data visualisations to understand the vernaculars of bots, and 3) adopting a non-binary analysis framework that contextualises bots within their socio-technical environments. 
By acknowledging the intricate interplay between bots, user and platform cultures, this paper contributes to method innovation on bot studies and emerging quali-quanti visual methods literature.

zeehaven – a tiny tool to convert data for social media research

Zeeschuimer (“sea foamer”) is a web browser extension from the Digital Methods Initiative in Amsterdam that enables you to collect data while you are browsing social media sites for research and analysis.

It currently works with platforms such as TikTok, Instagram, Twitter and LinkedIn, and produces an ndjson (newline-delimited JSON) file that can be imported into the open-source 4CAT: Capture and Analysis Toolkit for analysis.

To make data gathered with Zeeschuimer easier for researchers, reporters, students and others to work with, we’ve created zeehaven (“sea port”) – a tiny web-based tool to convert ndjson into csv format, which is easier to explore with spreadsheets as well as common data analysis and visualisation software.

Drag and drop an ndjson file into the “sea port” and the tool will prompt you to save a csv file. ✨📦✨

zeehaven was created as a collaboration between the Centre for Interdisciplinary Methodologies, University of Warwick, and the Department of Digital Humanities, King’s College London, and grew out of a series of Public Data Lab workshops held earlier this year to exchange digital methods teaching resources.

You can find the tool here and the code here. All data is converted locally.
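zeehaven’s own implementation isn’t described here, so the following is only a rough Python sketch of what an ndjson-to-csv conversion can involve. It assumes each line holds one JSON object, flattens nested objects into dotted column names (e.g. `author.name`), and stores lists as JSON text in a single cell. These choices, the function names and the sample records are illustrative assumptions, not zeehaven’s actual behaviour.

```python
import csv
import io
import json


def flatten(record: dict, prefix: str = "") -> dict:
    """Flatten nested objects into one level, joining keys with dots.

    Lists are serialised as JSON text so they fit into a single CSV cell.
    """
    flat = {}
    for key, value in record.items():
        name = f"{prefix}.{key}" if prefix else key
        if isinstance(value, dict):
            flat.update(flatten(value, name))
        elif isinstance(value, list):
            flat[name] = json.dumps(value, ensure_ascii=False)
        else:
            flat[name] = value
    return flat


def ndjson_to_csv(ndjson_text: str) -> str:
    """Convert newline-delimited JSON (one object per line) into CSV text."""
    rows = [
        flatten(json.loads(line))
        for line in ndjson_text.splitlines()
        if line.strip()  # skip blank lines, e.g. a trailing newline
    ]
    # Records often have different fields, so the header is the union of
    # all keys, in the order they are first seen.
    columns = list(dict.fromkeys(name for row in rows for name in row))
    out = io.StringIO()
    # restval="" leaves a cell empty when a record lacks that column.
    writer = csv.DictWriter(out, fieldnames=columns, restval="")
    writer.writeheader()
    writer.writerows(rows)
    return out.getvalue()


if __name__ == "__main__":
    # Hypothetical two-record sample, not real Zeeschuimer output.
    sample = (
        '{"id": 1, "author": {"name": "a"}}\n'
        '{"id": 2, "author": {"name": "b"}, "tags": ["x"]}\n'
    )
    print(ndjson_to_csv(sample))
```

Running the script prints a three-column table (`id`, `author.name`, `tags`) in which the first record gets an empty `tags` cell. Taking the union of keys matters because records collected from social media platforms rarely share exactly the same fields.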

New article on GitHub and the platformisation of software development

An article on “The platformisation of software development: Connective coding and platform vernaculars on GitHub” by Liliana Bounegru has just been published in Convergence: The International Journal of Research into New Media Technologies.

The article is accompanied by a set of free tools for researching GitHub, co-developed by Liliana with the Digital Methods Initiative, including tools to:

- Extract the meta-data of organizations on GitHub

- Extract the meta-data of GitHub repositories

- Scrape GitHub for forks of projects

- Scrape GitHub for user interactions and user-to-repository relations

- Extract meta-data about users on GitHub

- Find out which users contributed source code to GitHub repositories
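The tools’ internals aren’t described here, but as a rough illustration of what extracting repository meta-data can involve, the Python sketch below queries GitHub’s public REST API endpoint for a single repository (`GET /repos/{owner}/{repo}`) using only the standard library. It is not the Digital Methods Initiative code; the function names and the selection of fields kept are illustrative assumptions.

```python
import json
import urllib.request

# Base URL of GitHub's public REST API.
API_ROOT = "https://api.github.com"

# Fields kept from the repository response. This selection is an
# illustrative assumption, not the set used by the DMI tools.
FIELDS = (
    "full_name",
    "description",
    "language",
    "stargazers_count",
    "forks_count",
    "open_issues_count",
    "created_at",
    "pushed_at",
)


def repo_url(owner: str, repo: str) -> str:
    """Build the REST endpoint URL for a single repository."""
    return f"{API_ROOT}/repos/{owner}/{repo}"


def summarise_repo(payload: dict) -> dict:
    """Keep only the selected meta-data fields (missing ones become None)."""
    return {field: payload.get(field) for field in FIELDS}


def fetch_repo_metadata(owner: str, repo: str) -> dict:
    """Fetch and summarise meta-data for one repository.

    Requests are unauthenticated, so they are subject to GitHub's
    low anonymous rate limit.
    """
    request = urllib.request.Request(
        repo_url(owner, repo),
        headers={"Accept": "application/vnd.github+json"},
    )
    with urllib.request.urlopen(request, timeout=10) as response:
        return summarise_repo(json.load(response))
```

For example, `fetch_repo_metadata("octocat", "Hello-World")` would return a small dictionary describing GitHub’s demo repository (this needs network access). Separating the network call from `summarise_repo` keeps the field selection easy to change and to test offline.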

The article is available open access here. The abstract is copied below.

This article contributes to recent scholarship on platform, software and media studies by critically engaging with the ‘social coding’ platform GitHub, one of the most prominent actors in the online proprietary and F/OSS (free and/or open-source software) code hosting space. It examines the platformisation of software and project development on GitHub by combining institutional and cultural analysis. The institutional analysis focuses on critically examining the platform from a material-economic perspective to understand how it configures contemporary software and project development work. It proposes the concept of ‘connective coding’ to characterise how software intermediaries such as GitHub configure, valorise and capitalise on public repositories, developer and organisation profiles. This institutional perspective is complemented by a case study analysing cultural practices mediated by the platform. The case study examines the platform vernaculars of news media and journalism initiatives highlighted by Source, a key publication in the newsroom software development space, and how GitHub modulates visibility in this space. It finds that the high-visibility platform vernacular of this news media and journalism space is dominated by a mix of established actors such as the New York Times, the Guardian and Bloomberg, as well as more recent actors and initiatives such as ProPublica and Document Cloud. This high-visibility news media and journalism platform vernacular is characterised by multiple F/OSS and F/OSS-inspired practices and styles. Finally, by contrast, low-visibility public repositories in this space may be seen as indicative of GitHub’s role in facilitating various kinds of ‘post-F/OSS’ software development cultures.

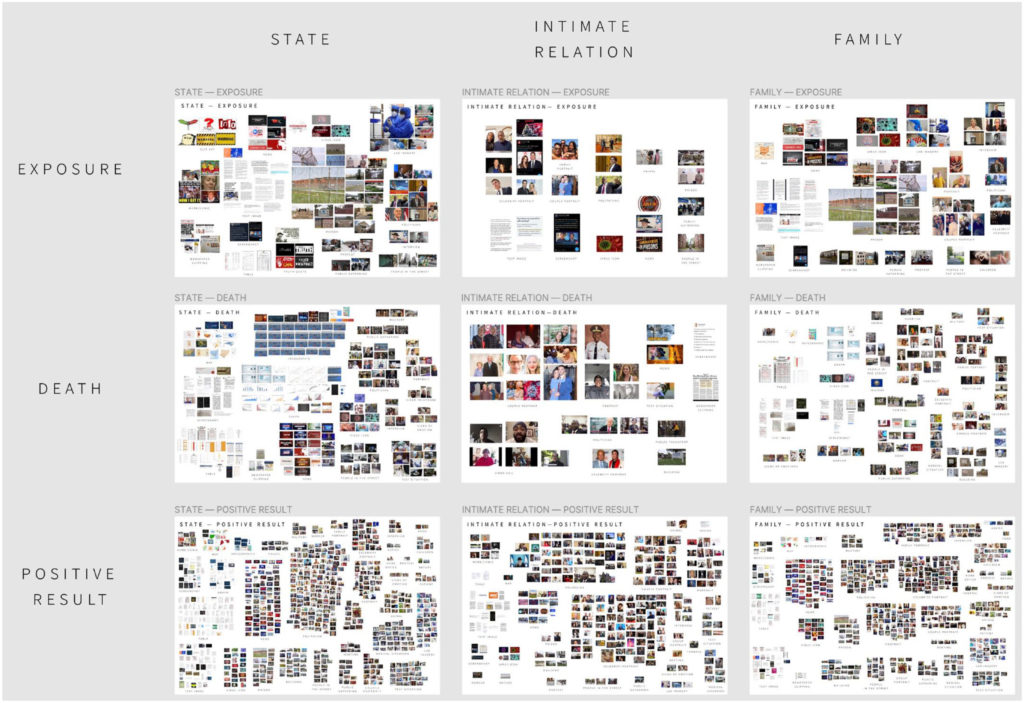

Article on COVID-19 testing situations on Twitter published in Social Media + Society

An article on “Testing and Not Testing for Coronavirus on Twitter: Surfacing Testing Situations Across Scales With Interpretative Methods” has just been published in Social Media + Society, co-authored by Noortje Marres, Gabriele Colombo, Liliana Bounegru, Jonathan W. Y. Gray, Carolin Gerlitz and James Tripp, building on a series of workshops in Warwick, Amsterdam, St Gallen and Siegen.

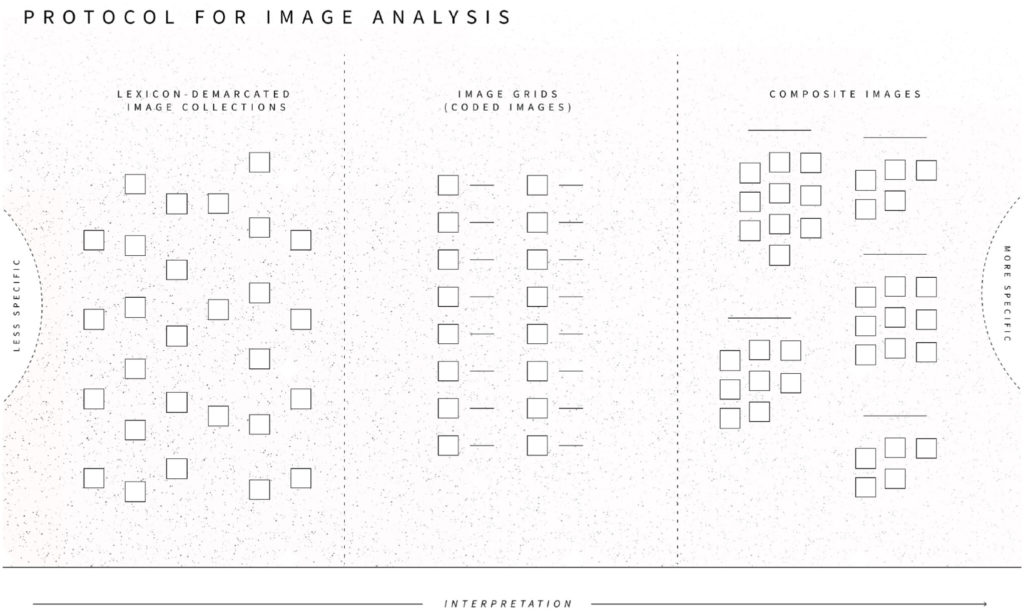

The article explores testing situations – moments in which it is no longer possible to go on in the usual way – across scales during the COVID-19 pandemic through interpretative querying and sub-setting of Twitter data (“data teasing”), together with situational image analysis.

The full text is available open access here. Further details and links can be found at this project page. The abstract and reference are copied below.

How was testing—and not testing—for coronavirus articulated as a testing situation on social media in the Spring of 2020? Our study examines everyday situations of Covid-19 testing by analyzing a large corpus of Twitter data collected during the first 2 months of the pandemic. Adopting a sociological definition of testing situations, as moments in which it is no longer possible to go on in the usual way, we show how social media analysis can be used to surface a range of such situations across scales, from the individual to the societal. Practicing a form of large-scale data exploration we call “interpretative querying” within the framework of situational analysis, we delineated two types of coronavirus testing situations: those involving locations of testing and those involving relations. Using lexicon analysis and composite image analysis, we then determined what composes the two types of testing situations on Twitter during the relevant period. Our analysis shows that contrary to the focus on individual responsibility in UK government discourse on Covid-19 testing, English-language Twitter reporting on coronavirus testing at the time thematized collective relations. By a variety of means, including in-memoriam portraits and infographics, this discourse rendered explicit challenges to societal relations and arrangements arising from situations of testing and not testing for Covid-19 and highlighted the multifaceted ways in which situations of corona testing amplified asymmetrical distributions of harms and benefits between different social groupings, and between citizens and state, during the first months of the pandemic.

Marres, N., Colombo, G., Bounegru, L., Gray, J. W. Y., Gerlitz, C., & Tripp, J. (2023). Testing and Not Testing for Coronavirus on Twitter: Surfacing Testing Situations Across Scales With Interpretative Methods. Social Media + Society, 9(3). https://doi.org/10.1177/20563051231196538

Working paper on “Testing ‘AI’: Do We Have a Situation?”

A new working paper on “Testing ‘AI’: Do We Have a Situation?”, based on a conversation between Noortje Marres and Philippe Sormani, has just been published as part of a working paper series from “Media of Cooperation” at the University of Siegen. The paper can be found here and further details are copied below.

The new publication “Testing ‘AI’: Do We Have a Situation?” in the Working Paper Series (No. 28, June 2023) is based on the transcription of a recent conversation between the authors Noortje Marres and Philippe Sormani about current instances of the real-world testing of “AI” and the “situations” they have, or as the case may be have not, given rise to. The conversation took place online on 25 May 2022 as part of the Lecture Series “Testing Infrastructures”, organized by the Collaborative Research Center (CRC) 1187 “Media of Cooperation” at the University of Siegen, Germany. This working paper is an elaborated version of that conversation.

In their conversation, Marres and Sormani discuss the social implications of AI based on three questions. First, they return to a classic critique that sociologists and anthropologists have levelled at AI, namely the claim that the ontology and epistemology underlying AI development are rationalist and individualist, and as such are marked by blind spots for the social, in particular for the situated or situational embedding of AI (Suchman, 1987, 2007; Star, 1989). Second, they delve into whether and how social studies of technology can account for AI testing in real-world settings in situational terms. Third, they ask what this tells us about possible tensions and alignments between the different “definitions of the situation” assumed in social studies, engineering and computer science in relation to AI. Finally, they discuss the ramifications for their methodological commitment to “the situation” in the social study of AI.

Noortje Marres is Professor of Science, Technology and Society at the Centre for Interdisciplinary Methodologies at the University of Warwick and Guest Professor at the Media of Cooperation Collaborative Research Centre at the University of Siegen. She has published two monographs: Material Participation (2012) and Digital Sociology (2017).

Philippe Sormani is Senior Researcher and Co-Director of the Science and Technology Studies Lab at the University of Lausanne. Drawing on and developing ethnomethodology, he has published on experimentation in and across different fields of activity, ranging from experimental physics (in Respecifying Lab Ethnography, 2014) to artistic experiments (in Practicing Art/Science, 2019).

The paper “Testing ‘AI’: Do We Have a Situation?” is published as part of the Working Paper Series of CRC 1187, which promotes inter- and transdisciplinary media research and provides an avenue for rapid publication and dissemination of ongoing research located at or associated with the CRC. The purpose of the series is to circulate in-progress research to the wider research community beyond the CRC. All Working Papers are accessible via the website.

Image caption: Ghost #8 (Memories of a mise en abîme with a bare back in front of an untamable tentacular screen), experimenting with OpenAI DALL-E, Maria Guta and Lauren Huret (Iris), 2022. (Courtesy of the artists)

forestscapes listening lab at re:publica 23, Berlin, 5-7th June

As part of the forestscapes project we’re organising a listening lab at re:publica 23, the digital society festival in Berlin, 5-7th June 2023:

How can generative soundscape composition enable different perspectives on forests in an era of planetary crisis? The forestscapes listening lab explores how sound can serve as a medium for collective inquiry into forests as living cultural landscapes.

The soundscapes are composed from folders of sounds drawn from different sources, including field recordings from researchers, sound artists and forest practitioners, as well as online sounds from the web, social media and sound archives. They are composed using custom scripts with the open-source SuperCollider software, as well as the open-source norns device, a “sound machine for the exploration of time and space”.

The re:publica installation will include soundscapes from workshops in London and Berlin – including some new pieces from the Environmental Data, Media, and the Humanities hackathon last week.

Cross-posted from jonathangray.org.

Network exploration on the web: an interview with Gephi Lite

Following the recent release of Gephi Lite, an open-source web-based visual network exploration tool, we interviewed its developers about the background of the project, what they’ve done and future plans…

What is Gephi Lite?

Gephi Lite can actually be defined in two ways. The first definition follows the name we chose: Gephi Lite is a lighter version of the Gephi desktop software, targeting users who need to work on smaller networks with less complex operations in mind.

The second definition is more focused on the technical context: Gephi Lite is a serverless web application to drive visual network analysis. It requires nothing more than an internet connection and a modern web browser.
