How can screenshots trouble our understanding of AI?

To explore this we’re launching a call for screenshots as part of a research collaboration co-organised by the Digital Futures Institute’s Centre for Digital Culture and Centre for Attention Studies at King’s College London, the médialab at Sciences Po, Paris and the Public Data Lab.

We’d be grateful for your help in sharing this call.

Further details can be found here and copied below.

[- – – – – – – ✄ – – – snip – – – – – – – – – -]

troubling AI: a call for screenshots 📸

How can screenshots trouble our understanding of AI?

This “call for screenshots” invites you to explore this question by sharing a screenshot of an interaction with AI that you find troubling, whether you created it yourself or someone shared it with you. Please accompany it with a short statement on how you interpret the image and the circumstances in which you came to have it.

The screenshot is perhaps one of today’s most familiar and accessible modes of data capture. With regard to AI, screenshots can capture moments when the situational, temporary and emergent aspects of interactions are foregrounded over behavioural patterning. They also have a ‘social life’: we share them with each other with various social and political intentions and commitments.

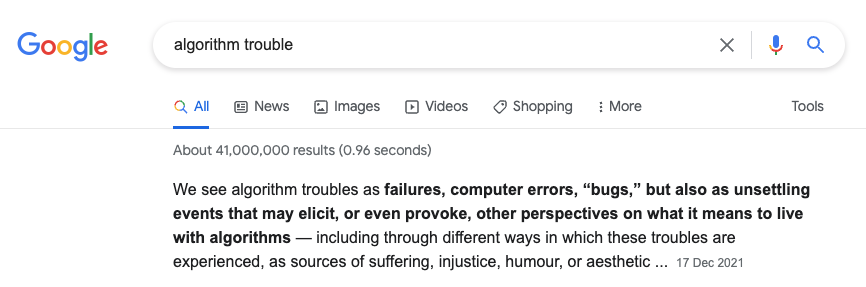

Screenshots have accordingly become a prominent method for documenting and sharing AI’s injustices and other AI troubles – from researchers studying racist search results to customers capturing swearing chatbots, from artists exploring algorithmic culture to social media users publicising bias.

With this call, we are aiming to build a collective picture of AI’s weirdness, strangeness and uncanniness, and how screenshotting can open up possibilities for collectivising troubles and concerns about AI.

This call invites screenshots of interactions with AI inspired by these examples and inquiries, accompanied by a few words about what is troubling for you about those interactions. You are invited to interpret this call in your own way: we want to know what you perceive to be a ‘troubling’ screenshot and why.

Please send us mobile phone screenshots, laptop or desktop screen captures, or other forms of grabbing content from a screen, including videos or other types of screen recordings, through the form below (which can also be found here) by 15th November 2024.

Your images will be featured in an online publication and workshop (with your permission and appropriate credit), co-organised by the Digital Futures Institute’s Centre for Digital Culture and Centre for Attention Studies at King’s College London, the médialab at Sciences Po, Paris and the Public Data Lab.

Joanna Zylinska

Tommy Shaffer Shane

Axel Meunier

Jonathan W. Y. Gray