Public Data Lab co-founders Liliana Bounegru and Jonathan Gray will be giving a talk at the Center of Excellence in Image Studies (CESI), University of Bucharest on 12th April 2024. Further details in the poster below.

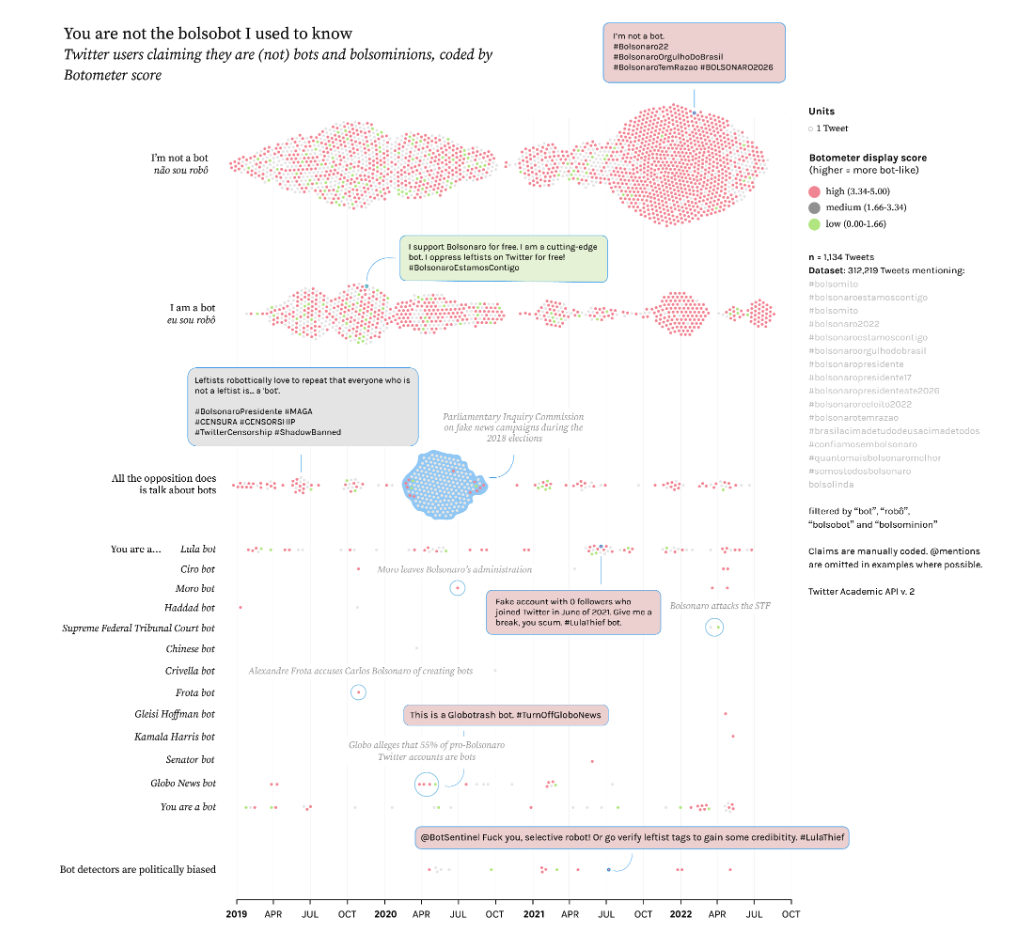

An article on “Quali-quanti visual methods and political bots: A cross-platform study of pro- & anti- bolsobots” has just been published in the special issue “Methods in Visual Politics and Protest” of the Journal of Digital Social Research, co-authored by Public Data Lab associates Janna Joceli Omena, Thais Lobo, Giulia Tucci, Elias Bitencourt, Emillie de Keulenaar, Francisco W. Kerche, Jason Chao, Marius Liedtke, Mengying Li, Maria Luiza Paschoal, and Ilya Lavrov.

The article provides methodological contributions for interpreting bot-associated image collections and textual content across Instagram, TikTok and Twitter/X, building on a series of data sprints conducted as part of the Public Data Lab “Profiling Bolsobot Networks” project.

The full text is available open access here. Further details and links can be found at the project page. Below is the abstract:

Computational social science research on automated social media accounts, colloquially dubbed “bots”, has tended to rely on binary verification methods to detect bot operations on social media. Typically focused on textual data from Twitter (now rebranded as “X”), these methods are prone to finding false positives and failing to understand the subtler ways in which bots operate over time and in particular contexts. This research paper brings methodological contributions to such studies, focusing on what it calls “bolsobots” in Brazilian social media. Named after former Brazilian President Jair Bolsonaro, the bolsobots refer to the extensive and skilful usage of partial or fully automated accounts by marketing teams, hackers, activists or campaign supporters. These accounts leverage organic online political culture to sway public opinion for or against policies, opposition figures, or Bolsonaro himself. Drawing on empirical case studies, this paper implements quali-quanti visual methods to operationalise specific techniques for interpreting bot-associated image collections and textual content across Instagram, TikTok and Twitter/X. To unveil the modus operandi of bolsobots, we map the networks of users they follow (“following networks”), explore the visual-textual content they post, and observe the strategies they deploy to adapt to platform content moderation. Such analyses tackle methodological challenges inherent in bot studies by employing three key strategies: 1) designing context-sensitive queries and curating datasets with platforms’ interfaces and search engines to mitigate the limitations of bot scoring detectors, 2) engaging qualitatively with data visualisations to understand the vernaculars of bots, and 3) adopting a non-binary analysis framework that contextualises bots within their socio-technical environments. 
By acknowledging the intricate interplay between bots, user and platform cultures, this paper contributes to method innovation on bot studies and emerging quali-quanti visual methods literature.

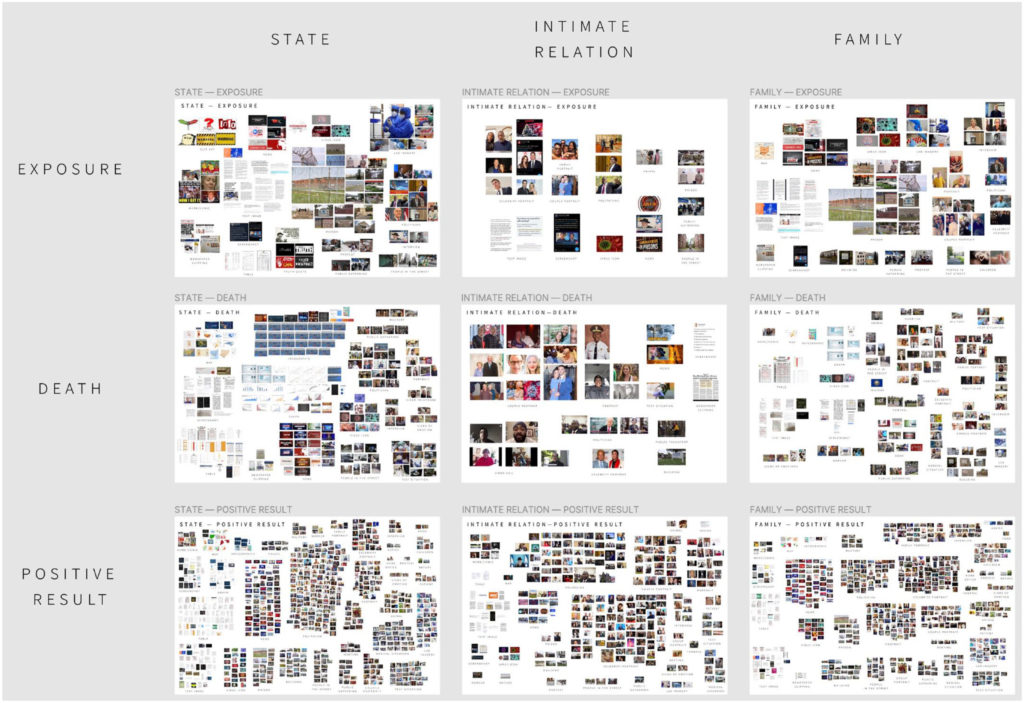

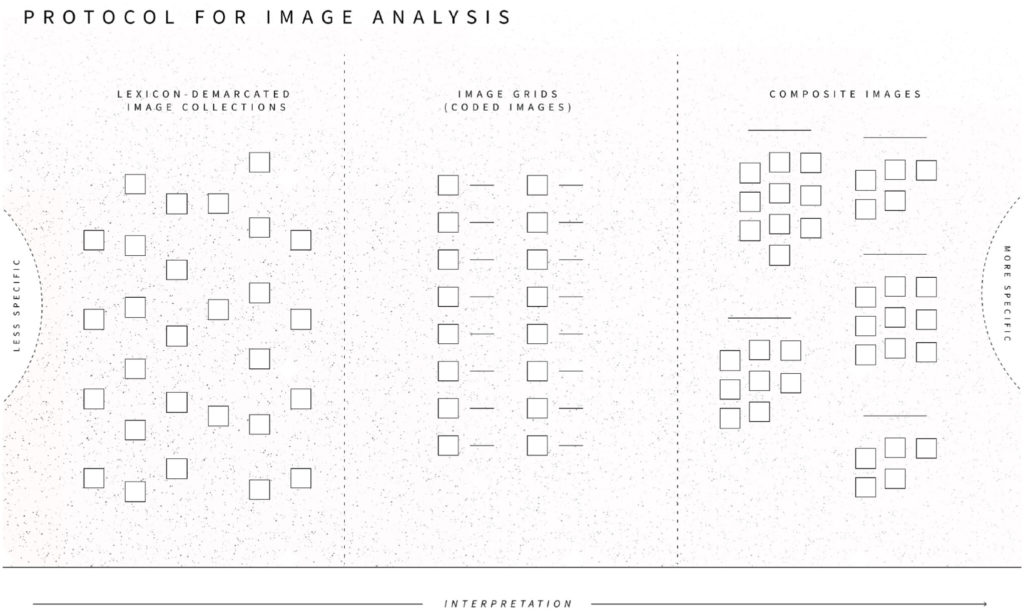

An article on “Testing and Not Testing for Coronavirus on Twitter: Surfacing Testing Situations Across Scales With Interpretative Methods” has just been published in Social Media + Society, co-authored by Noortje Marres, Gabriele Colombo, Liliana Bounegru, Jonathan W. Y. Gray, Carolin Gerlitz and James Tripp, building on a series of workshops in Warwick, Amsterdam, St Gallen and Siegen.

The article explores testing situations – moments in which it is no longer possible to go on in the usual way – across scales during the COVID-19 pandemic through interpretive querying and sub-setting of Twitter data (“data teasing”), together with situational image analysis.

The full text is available open access here. Further details and links can be found at this project page. The abstract and reference are copied below.

How was testing—and not testing—for coronavirus articulated as a testing situation on social media in the Spring of 2020? Our study examines everyday situations of Covid-19 testing by analyzing a large corpus of Twitter data collected during the first 2 months of the pandemic. Adopting a sociological definition of testing situations, as moments in which it is no longer possible to go on in the usual way, we show how social media analysis can be used to surface a range of such situations across scales, from the individual to the societal. Practicing a form of large-scale data exploration we call “interpretative querying” within the framework of situational analysis, we delineated two types of coronavirus testing situations: those involving locations of testing and those involving relations. Using lexicon analysis and composite image analysis, we then determined what composes the two types of testing situations on Twitter during the relevant period. Our analysis shows that contrary to the focus on individual responsibility in UK government discourse on Covid-19 testing, English-language Twitter reporting on coronavirus testing at the time thematized collective relations. By a variety of means, including in-memoriam portraits and infographics, this discourse rendered explicit challenges to societal relations and arrangements arising from situations of testing and not testing for Covid-19 and highlighted the multifaceted ways in which situations of corona testing amplified asymmetrical distributions of harms and benefits between different social groupings, and between citizens and state, during the first months of the pandemic.

Marres, N., Colombo, G., Bounegru, L., Gray, J. W. Y., Gerlitz, C., & Tripp, J. (2023). Testing and Not Testing for Coronavirus on Twitter: Surfacing Testing Situations Across Scales With Interpretative Methods. Social Media + Society, 9(3). https://doi.org/10.1177/20563051231196538

Social Studies of Science has just published “Seven moments with Bruno Latour” by Noortje Marres, Professor in Science, Technology and Society at the Centre for Interdisciplinary Methodologies (University of Warwick) and founding member of the Public Data Lab.

The piece moves across memories, conversations and encounters from 1999 to 2022, situating explorations of issue mapping, controversy mapping, hyperlink analysis, ecological politics, feminist science and technology studies, modes of existence, protocols for collective inquiry, and arts-based methods.

An excerpt on “Limburg, 1999”:

It is a rainy afternoon in Limburg, in the south of the Netherlands. I have taken a local train from Maastricht to Kerkrade to visit Rolduc, a Catholic abbey that also hosts academic conferences, and where the Dutch Graduate School for Science, Technology and Modern Culture (WTMC) is holding a meeting. I have a poster with me, a map of the Genetically Modified Food debate on the Web, a circle of nodes representing the websites of organizations that take positions on the issue of GM Foods, and the hyperlinks that connect them. I made this poster with colleagues at the Jan van Eyck Academy, a post-graduate art school in Maastricht, where I am working as a theorist-in-residence in the Design Department, as part of a team led by Richard Rogers, the media scholar, to develop digital methods of issue mapping.

It is wet and windy when I arrive in Kerkrade, and I approach the old, tall buildings of Rolduc abbey with what I can only call trepidation. Bruno has invited me to show our poster at the WTMC conference, but I am not at all sure that this was a good idea. I am not even a PhD student, and am based in an art school. I am a stranger. Fortunately, by the time I walk into the conference the poster session is about to start, and I am relieved that I can put my poster on the wall and simply stand there, next to my poster, in a clearly defined role. Bruno asks a lot of questions. Who are these organizations? What can the hyperlinks between them tell us about their ‘position’ in the controversy, and about the controversy itself? He does not ask: Why are you showing us a … data visualization? He does not ask: What are you doing, art or social science? He does not ask: If your poster presents a study of public controversy, then why are you doing this work in a graphic design department? Others join us and we have a conversation.

In 1999, to create what we would later call digital controversy maps was to step into an under-defined, interdisciplinary space. Our work at the Jan van Eyck Akademie brought together STS with design research, computing, internet studies and environmental politics, and at the time this did not make much institutional sense. Our work also looks strange. Indeed, how can one show a network visualisation and call it a debate? As it turned out Bruno Latour was strongly supportive of our approach: the development of interdisciplinary methods of inquiry, which combine social science with art and design, became one of his principal occupations in the decades that followed.

Applications are now open for the Digital Methods Winter School and Data Sprint 2023, which is on the theme of “What actually happened? The use and misuse of Open Source Intelligence (OSINT)”.

This will take place on 9-13th January 2023 at the University of Amsterdam. Applications are accepted until 1st December 2022.

More details and registration links are available here and an excerpt on this year’s theme and the format is copied below:

The Digital Methods Initiative (DMI), Amsterdam, is holding its annual Winter School on the ‘Use and Misuse of Open Source Intelligence (OSINT)’. The format is that of a (social media and web) data sprint, with tutorials as well as hands-on work for telling stories with data. There is also a programme of keynote speakers. It is intended for advanced Master’s students, PhD candidates and motivated scholars who would like to work on (and complete) a digital methods project in an intensive workshop setting. For a preview of what the event is like, you can view short video clips from previous editions of the School.

A new article on “Engaged research-led teaching: composing collective inquiry with digital methods and data” co-authored by Jonathan Gray, Liliana Bounegru, Richard Rogers, Tommaso Venturini, Donato Ricci, Axel Meunier, Michele Mauri, Sabine Niederer, Natalia Sánchez-Querubín, Marc Tuters, Lucy Kimbell and Anders Kristian Munk has just been published in Digital Culture & Education.

The article is available here, and the abstract is as follows:

This article examines the organisation of collaborative digital methods and data projects in the context of engaged research-led teaching in the humanities. Drawing on interviews, field notes, projects and practices from across eight research groups associated with the Public Data Lab (publicdatalab.org), it provides considerations for those interested in undertaking such projects, organised around four areas: composing (1) problems and questions; (2) collectives of inquiry; (3) learning devices and infrastructures; and (4) vernacular, boundary and experimental outputs. Informed by constructivist approaches to learning and pragmatist approaches to collective inquiry, these considerations aim to support teaching and learning through digital projects which surface and reflect on the questions, problems, formats, data, methods, materials and means through which they are produced.

Mathieu Jacomy and Anders Munk, TANT Lab & Public Data Lab

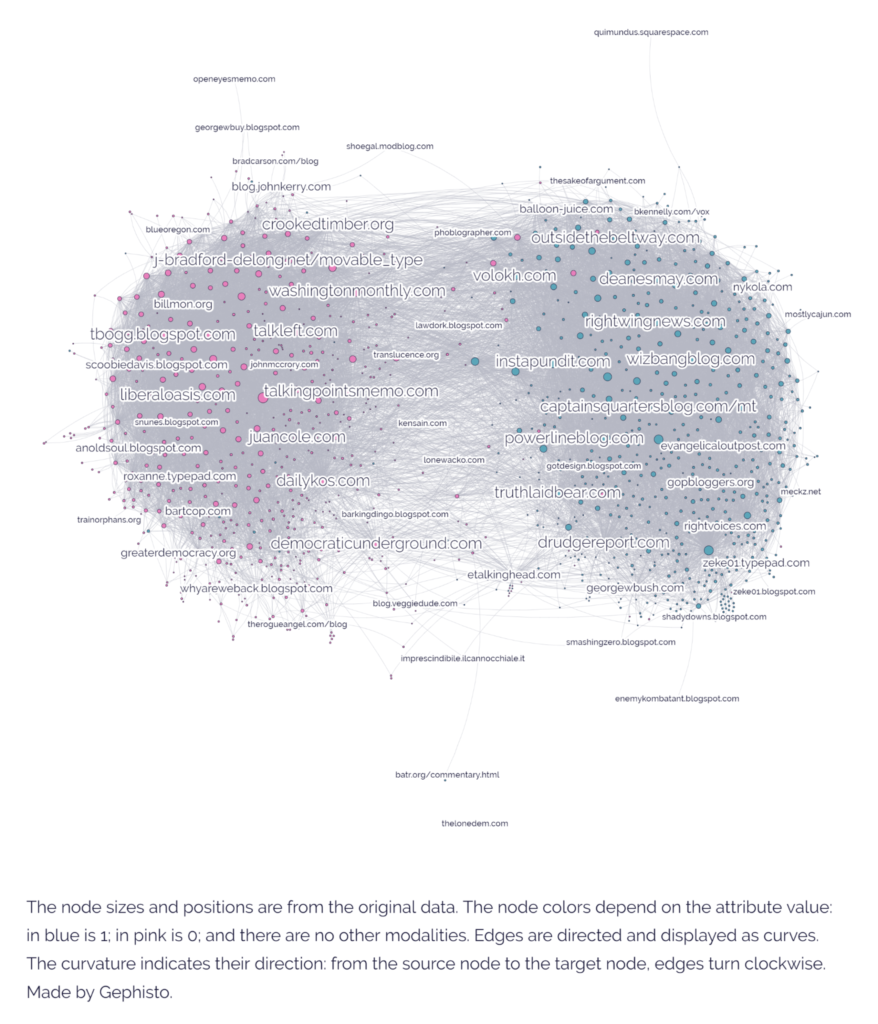

Gephisto is Gephi in one click. You give it network data, and it gives you a visualization. No settings. No skills needed. The dream! With a twist.

Gephisto produces visualizations such as the one above. It exists as a website, and you can just try it below. It includes test networks, you don’t even need one. Do it! Try it, and come back here. Then we talk about it.

https://jacomyma.github.io/gephisto/

Blog post by Emillie de Keulenaar, Francisco Kerche, Giulia Tucci, Janna Joceli Omena and Thais Lobo [alphabetical order].

Brazilian political bots have been active since 2014 to influence elections through the creation and maintenance of fake profiles across social media platforms. In 2017, bots’ influence and forms of interference gained a new status with the emergence of “bot factories” acting in support of Jair Bolsonaro’s election and presidency. What we call bolsobots are inauthentic social media accounts created to consistently support Bolsonaro’s political agenda over the years, namely Bolsonaro as a political candidate, President, and avatar of a conservative and militaristic vision of Brazilian history, where social discipline, Christian values and a strong but economically liberal state aim to uproot the decadent influence of “socialism” (Messenberg, 2019). From viralising or spreading hashtags to establishing target audiences with pro-Bolsonaro “slogan accounts” with a strong visual presence, these bots have also been tied to documented disinformation campaigns (Lobo & Carvalho, 2018; Militão & Rebello, 2019; Santini, Salles, & Tucci, 2021). Despite the efforts of social media platforms, including WhatsApp and Telegram, to restrict their more or less coordinated inauthentic activities (Euronews, 2021), bolsobots still exist and actively adapt to online cultures.

Accounting for the upcoming Brazilian 2022 elections, the project Profiling Bolsobots Networks investigates the practices of pro- and anti- Bolsonaro bots across Instagram, Twitter and TikTok. It aims to empirically demonstrate how to capture the operation of bolsobot networks; the types of accounts that constitute bot ecologies; how (differently) bots behave and promote content; how bolsobots change over time and across platforms, pending to different cultures of authenticity; and, finally, how platform moderation policies may impact their activities over time. In doing so, the project will produce a series of research reports on “bolsobot” networks and digital methods recipes to further the understanding of bots’ presence and influence in the communication ecosystem.

We are (so far) a group of six scholars collaborating on this project: Janna Joceli Omena (Public Data Lab / iNOVA Media Lab / University of Warwick), Thais Lobo (Public Data Lab / King’s College London), Francisco Kerche (Universidade Federal do Rio de Janeiro), Giulia Tucci (Universidade Federal do Rio de Janeiro), Emillie de Keulenaar (OILab / University of Groningen) and Elias Bitencourt (Universidade do Estado da Bahia). Below are some of the preliminary outputs of the project.

In this post Jason Chao, PhD candidate at the University of Siegen, introduces Memespector-GUI, a tool for doing research with and about data from computer vision APIs.

In recent years, tech companies started to offer computer vision capabilities through Application Programming Interfaces (APIs). Big names in the cloud industry have integrated computer vision services in their artificial intelligence (AI) products. These computer vision APIs are designed for software developers to integrate into their products and services. Indeed, your images may have been processed by these APIs unbeknownst to you. The operations and outputs of computer vision APIs are not usually presented directly to end-users.

The open-source Memespector-GUI tool aims to support investigations both with and about computer vision APIs by enabling users to repurpose, incorporate, audit and/or critically examine their outputs in the context of social and cultural research.

What kinds of outputs do these computer vision APIs produce? The specifications and the affordances of these APIs vary from platform to platform. As an example, here is a quick walkthrough of some of the features of Google Vision API…
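To give a rough sense of what such outputs can look like, here is a minimal Python sketch that flattens a label detection response into rows suitable for a spreadsheet. It is an illustration only, not how Memespector-GUI works internally: the sample response is hand-written and reduced to two fields (`description` and `score`) of the `labelAnnotations` list that Google Vision returns for label detection, the values are made up, and the function name `flatten_labels` and the file name `image-1.png` are hypothetical.

```python
import json

# Hand-written sample shaped like a (reduced) Google Vision label detection
# response. The labels and scores are invented for illustration.
SAMPLE_RESPONSE = """
{
  "responses": [
    {
      "labelAnnotations": [
        {"description": "Cat", "score": 0.98},
        {"description": "Mammal", "score": 0.91}
      ]
    }
  ]
}
"""


def flatten_labels(response_text, image_name):
    """Return one (image, label, score) row per label annotation."""
    payload = json.loads(response_text)
    rows = []
    for result in payload.get("responses", []):
        # An image with no detected labels simply has no labelAnnotations key.
        for label in result.get("labelAnnotations", []):
            rows.append((image_name, label["description"], label["score"]))
    return rows


rows = flatten_labels(SAMPLE_RESPONSE, "image-1.png")
for row in rows:
    print(row)
```

Keeping one row per image-label pair makes it straightforward to filter by confidence score or to build image-label networks later on.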

A new special issue of the bilingual journal Diseña has just been released. The issue, edited by Gabriele Colombo and Sabine Niederer, explores the realm of online images as a site for visual research and design.

As methods for visual analysis gain urgency in an image-saturated society, this special issue explores visual ways to study online images. The proposition we make is to stay as close to the material as possible. How to approach the visual with the visual? What type of images may one design to make sense of, reshape, and reanimate online image collections? The special issue also touches upon the role that algorithmic tools, including machine vision, can play in such research efforts. Which kinds of collaborations between humans and machines can we envision to better grasp and critically interrogate the dynamics of today’s digital visual culture?

The articles (available both in English and in Spanish) touch on the diversity of formats and uses of online images, focusing on collection and visual interpretation methods. Other themes touched on by this issue are image-machine co-creation processes and their ethics, participatory actions for image production and analysis, and feminist approaches to digital visual work.

Further information about the issue can be found in our introduction. Following is the complete list of contributions (with links) and authors (some from the Public Data Lab).
